This document describes the support status and in particular the security support status of the Xen branch within which you find it.

Windows paravirtual (PV) drivers are high-performance network and disk drivers that significantly reduce the overhead of the traditional implementation of I/O device emulation. These drivers provide improved network and disk throughput to run fully virtualized Windows guests in an Oracle VM Server for x86 environment.

See the bottom of the file for the definitions of the support status levels etc.


2.1 Kconfig

- To install a driver on your target system, unpack the tarball, navigate to either the x86 or x64 subdirectory (whichever is appropriate), and run the copy of dpinst.exe you find there with Administrator privileges. You can also get the drivers from https://xenbits.xen.org/pvdrivers/win/8.2.1.

- Xenvif.tar: Windows PV Network Class Driver. Install it with the dpinst.exe procedure described above.

- Set up a VM with the appropriate drivers. These drivers include the hardware driver for the NIC, as well as drivers to access xenbus, xenstore, and netback. Any Linux distro with dom0 Xen support should do. The author recommends xen-tools. You should also give the VM a descriptive name; 'domnet' would be a sensible default.

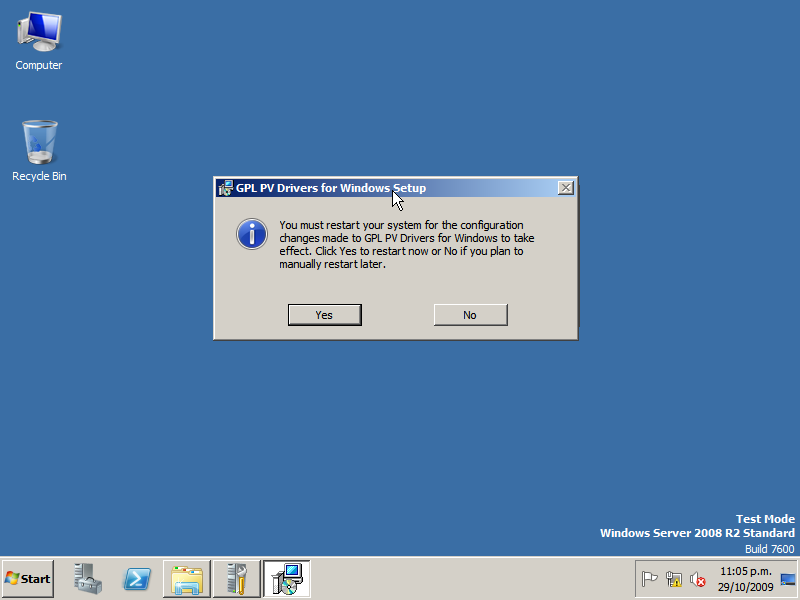

- To boost performance, fully virtualized HVM guests can use special paravirtual device drivers to bypass the emulation for disk and network I/O. Xen Windows HVM guests can use the open-source GPLPV drivers. See the XenLinuxPVonHVMdrivers wiki page for more information about Xen PV-on-HVM drivers for Linux HVM guests.

EXPERT and DEBUG Kconfig options are not security supported. Other Kconfig options are supported, if the related features are marked as supported in this document.

2.2 Host Architecture

2.2.1 x86-64

2.2.2 ARM v7 + Virtualization Extensions

2.2.3 ARM v8

For the Cortex A57 r0p0 - r1p1, see Errata 832075.

2.3 Host hardware support

2.3.1 Physical CPU Hotplug

2.3.2 Physical Memory Hotplug

2.3.3 Host ACPI (via Domain 0)

2.3.4 x86/Intel Platform QoS Technologies

2.3.5 IOMMU

2.3.6 ARM/GICv3 ITS

Extension to the GICv3 interrupt controller to support MSI.

2.4 Guest Type

2.4.1 x86/PV

Traditional Xen PV guest

No hardware requirements

2.4.2 x86/HVM

Fully virtualised guest using hardware virtualisation extensions

Requires hardware virtualisation support (Intel VMX / AMD SVM)

2.4.3 x86/PVH

PVH is a next-generation paravirtualized mode designed to take advantage of hardware virtualization support when possible. During development this was sometimes called HVMLite or PVHv2.

Requires hardware virtualisation support (Intel VMX / AMD SVM).

Dom0 support requires an IOMMU (Intel VT-d / AMD IOMMU).

2.4.4 ARM

ARM only has one guest type at the moment

2.5 Hypervisor file system

2.5.1 Build info

2.5.2 Hypervisor config

2.5.3 Runtime parameters

2.6 Toolstack

2.6.1 xl

2.6.2 Direct-boot kernel image format

Format which the toolstack accepts for direct-boot kernels

2.6.3 Dom0 init support for xl

2.6.4 JSON output support for xl

Output of information in machine-parseable JSON format

2.6.5 Open vSwitch integration for xl

2.6.6 Virtual cpu hotplug

2.6.7 QEMU backend hotplugging for xl

2.6.8 xenlight Go package

Go (golang) bindings for libxl

2.6.9 Linux device model stubdomains

Support for running qemu-xen device model in a linux stubdomain.

2.7 Toolstack/3rd party

2.7.1 libvirt driver for xl

2.8 Debugging, analysis, and crash post-mortem

2.8.1 Host serial console

2.8.2 Hypervisor ‘debug keys’

These are functions triggered either from the host serial console, or via the xl ‘debug-keys’ command, which cause Xen to dump various hypervisor state to the console.

2.8.3 Hypervisor synchronous console output (sync_console)

Xen command-line flag to force synchronous console output.

Useful for debugging, but not suitable for production environments due to incurred overhead.

2.8.4 gdbsx

Debugger to debug ELF guests

2.8.5 Soft-reset for PV guests

Soft-reset allows a new kernel to start ‘from scratch’ with a fresh VM state, but with all the memory from the previous state of the VM intact. This is primarily designed to allow “crash kernels”, which can do core dumps of memory to help with debugging in the event of a crash.

2.8.6 xentrace

Tool to capture Xen trace buffer data

2.8.7 gcov

Export hypervisor coverage data suitable for analysis by gcov or lcov.

2.9 Memory Management

2.9.1 Dynamic memory control

Allows a guest to add or remove memory after boot-time. This is typically done by a guest kernel agent known as a “balloon driver”.

2.9.2 Populate-on-demand memory

This is a mechanism that allows normal operating systems with only a balloon driver to boot with memory < maxmem.
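As a rough illustration, populate-on-demand comes into play when a guest's boot-time allocation is below its maximum. The fragment below is a sketch using the `memory` and `maxmem` keys of an xl guest configuration; the values are arbitrary assumptions, and the lines are plain `key = value` assignments written so that they also parse as Python.

```python
# Sketch of the relevant lines of an HVM guest configuration (illustrative values).
memory = 1024   # MiB the guest starts with
maxmem = 2048   # MiB the guest may be ballooned up to later
```

With `memory` equal to `maxmem`, the guest is fully populated at boot and the mechanism is not needed.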

2.9.3 Memory Sharing

Allow sharing of identical pages between guests

2.9.4 Memory Paging

Allow pages belonging to guests to be paged to disk

2.9.5 Alternative p2m

Alternative p2m (altp2m) allows external monitoring of guest memory by maintaining multiple physical to machine (p2m) memory mappings.

2.10 Resource Management

2.10.1 CPU Pools

Groups physical cpus into distinct groups called “cpupools”, with each pool having the capability of using different schedulers and scheduling properties.

2.10.2 Core Scheduling

Allows virtual cpus to be grouped into virtual cores, which are scheduled on the physical cores. As a result, different guests never run at the same time on the same physical core.

2.10.3 Credit Scheduler

A weighted proportional fair share virtual CPU scheduler. This is the default scheduler.

2.10.4 Credit2 Scheduler

A general purpose scheduler for Xen, designed with particular focus on fairness, responsiveness, and scalability

2.10.5 RTDS based Scheduler

A soft real-time CPU scheduler built to provide guaranteed CPU capacity to guest VMs on SMP hosts

2.10.6 ARINC653 Scheduler

A periodically repeating fixed timeslice scheduler.


Currently only single-vcpu domains are supported.

2.10.7 Null Scheduler

A very simple, very static scheduling policy that always schedules the same vCPU(s) on the same pCPU(s). It is designed for maximum determinism and minimum overhead on embedded platforms.

2.10.8 NUMA scheduler affinity

Enables NUMA aware scheduling in Xen

2.11 Scalability

2.11.1 Super page support

NB that this refers to the ability of guests to have higher-level page table entries point directly to memory, improving TLB performance. On ARM, and on x86 in HAP mode, the guest has whatever support is enabled by the hardware.

This feature is independent of the ARM “page granularity” feature (see below).

On x86 in shadow mode, only 2MiB (L2) superpages are available; furthermore, they do not have the performance characteristics of hardware superpages.

2.11.2 x86/PVHVM

This is a useful label for a set of hypervisor features which add paravirtualized functionality to HVM guests for improved performance and scalability. This includes exposing event channels to HVM guests.

2.12 High Availability and Fault Tolerance

2.12.1 Remus Fault Tolerance

2.12.2 COLO Manager

2.12.3 x86/vMCE

Forward Machine Check Exceptions to appropriate guests

2.13 Virtual driver support, guest side

2.13.1 Blkfront

Guest-side driver capable of speaking the Xen PV block protocol

2.13.2 Netfront

Guest-side driver capable of speaking the Xen PV networking protocol

2.13.3 PV Framebuffer (frontend)

Guest-side driver capable of speaking the Xen PV Framebuffer protocol

2.13.4 PV display (frontend)

Guest-side driver capable of speaking the Xen PV display protocol

2.13.5 PV Console (frontend)

Guest-side driver capable of speaking the Xen PV console protocol

2.13.6 PV keyboard (frontend)

Guest-side driver capable of speaking the Xen PV keyboard protocol. Note that the “keyboard protocol” includes mouse / pointer / multi-touch support as well.

2.13.7 PV USB (frontend)

2.13.8 PV SCSI protocol (frontend)

NB that while the PV SCSI frontend is in Linux and tested regularly, there is currently no xl support.

2.13.9 PV TPM (frontend)

Guest-side driver capable of speaking the Xen PV TPM protocol

2.13.10 PV 9pfs frontend

Guest-side driver capable of speaking the Xen 9pfs protocol

2.13.11 PVCalls (frontend)

Guest-side driver capable of making pv system calls

2.13.12 PV sound (frontend)

Guest-side driver capable of speaking the Xen PV sound protocol

2.14 Virtual device support, host side

For host-side virtual device support, “Supported” and “Tech preview” include xl/libxl support unless otherwise noted.

2.14.1 Blkback

Host-side implementations of the Xen PV block protocol.

Backends only support raw format unless otherwise specified.

2.14.2 Netback

Host-side implementations of Xen PV network protocol

2.14.3 PV Framebuffer (backend)

Host-side implementation of the Xen PV framebuffer protocol

2.14.4 PV Console (xenconsoled)

Host-side implementation of the Xen PV console protocol

2.14.5 PV keyboard (backend)

Host-side implementation of the Xen PV keyboard protocol. Note that the “keyboard protocol” includes mouse / pointer support as well.

2.14.6 PV USB (backend)

Host-side implementation of the Xen PV USB protocol

2.14.7 PV SCSI protocol (backend)

NB that while the PV SCSI backend is in Linux and tested regularly, there is currently no xl support.

2.14.8 PV TPM (backend)

2.14.9 PV 9pfs (backend)

2.14.10 PVCalls (backend)

PVCalls backend has been checked into Linux, but has no xl support.

2.14.11 Online resize of virtual disks

2.15 Security

2.15.1 Driver Domains

“Driver domains” means allowing non-Domain 0 domains with access to physical devices to act as back-ends.

See the appropriate “Device Passthrough” section for more information about security support.

2.15.2 Device Model Stub Domains

Vulnerabilities of a device model stub domain to a hostile driver domain (either compromised or untrusted) are excluded from security support.

2.15.3 Device Model Deprivileging

This means adding extra restrictions to a device model in order to prevent a compromised device model from attacking the rest of the domain it’s running in (normally dom0).

“Tech preview with limited support” means we will not issue XSAs for the additional functionality provided by the feature; but we will issue XSAs in the event that enabling this feature opens up a security hole that would not be present with the feature disabled.

For example, while this is classified as tech preview, a bug in libxl which failed to change the user ID of QEMU would not receive an XSA, since without this feature the user ID wouldn’t be changed. But a change which made it possible for a compromised guest to read arbitrary files on the host filesystem without compromising QEMU would be issued an XSA, since that does weaken security.

2.15.4 KCONFIG Expert

2.15.5 Live Patching

Compile time disabled for ARM by default.

2.15.6 Virtual Machine Introspection

2.15.7 XSM & FLASK

Compile time disabled by default.

Also note that using XSM to delegate various domain control hypercalls to particular other domains, rather than only permitting use by dom0, is also specifically excluded from security support for many hypercalls. Please see XSA-77 for more details.

2.15.8 FLASK default policy

The default policy includes FLASK labels and roles for a “typical” Xen-based system with dom0, driver domains, stub domains, domUs, and so on.

2.16 Virtual Hardware, Hypervisor

2.16.1 x86/Nested PV

This means running a Xen hypervisor inside an HVM domain on a Xen system, with support for PV L2 guests only (i.e., hardware virtualization extensions not provided to the guest).

This works, but has performance limitations because the L1 dom0 can only access emulated L1 devices.

Xen may also run inside other hypervisors (KVM, Hyper-V, VMWare), but nobody has reported on performance.

2.16.2 x86/Nested HVM

This means providing hardware virtualization support to guest VMs, allowing, for instance, a nested Xen to support both PV and HVM guests. It also implies support for other hypervisors, such as KVM, Hyper-V, Bromium, and so on, as guests.

2.16.3 vPMU

Virtual Performance Management Unit for HVM guests

Disabled by default (enable with hypervisor command line option). This feature is not security supported: see https://xenbits.xen.org/xsa/advisory-163.html

2.16.4 Argo: Inter-domain message delivery by hypercall

2.16.5 x86/PCI Device Passthrough

Only systems using IOMMUs are supported.

Not compatible with migration, populate-on-demand, altp2m, introspection, memory sharing, or memory paging.

Because of hardware limitations (affecting any operating system or hypervisor), it is generally not safe to use this feature to expose a physical device to completely untrusted guests. However, this feature can still confer significant security benefit when used to remove drivers and backends from domain 0 (i.e., Driver Domains).

2.16.6 x86/Multiple IOREQ servers

An IOREQ server provides emulated devices to HVM and PVH guests. QEMU is normally the only IOREQ server, but Xen has support for multiple IOREQ servers. This allows for custom or proprietary device emulators to be used in addition to QEMU.

2.16.7 ARM/IOREQ servers

2.16.8 ARM/Non-PCI device passthrough

Note that this still requires an IOMMU that covers the DMA of the device to be passed through.

2.16.9 ARM: 16K and 64K page granularity in guests

No support for QEMU backends in a 16K or 64K domain.

2.16.10 ARM: Guest Device Tree support

2.16.11 ARM: Guest ACPI support

2.16.12 Arm: OP-TEE Mediator

2.17 Virtual Hardware, QEMU

This section describes supported devices available in HVM mode using a qemu devicemodel (the default).

Note that other devices are available but not security supported.

2.17.1 x86/Emulated platform devices (QEMU):

2.17.2 x86/Emulated network (QEMU):

2.17.3 x86/Emulated storage (QEMU):

See the section Blkback for image formats supported by QEMU.

2.17.4 x86/Emulated graphics (QEMU):

2.17.5 x86/Emulated audio (QEMU):

2.17.6 x86/Emulated input (QEMU):

2.17.7 x86/Emulated serial card (QEMU):

2.17.8 x86/Host USB passthrough (QEMU):

2.17.9 qemu-xen-traditional

The Xen Project provides an old version of qemu with modifications which enable use as a device model stub domain. The old version is normally selected by default only in a stub dm configuration, but it can be requested explicitly in other configurations, for example in xl with device_model_version='QEMU_XEN_TRADITIONAL'.

qemu-xen-traditional is security supported only for those available devices which are supported for mainstream QEMU (see above), with trusted driver domains (see Device Model Stub Domains).

2.18 Virtual Firmware

2.18.1 x86/HVM iPXE

Booting a guest via PXE.

PXE inherently places full trust of the guest in the network, and so should only be used when the guest network is under the same administrative control as the guest itself.

2.18.2 x86/HVM BIOS

Booting a guest via guest BIOS firmware

2.18.3 x86/HVM OVMF

OVMF firmware implements the UEFI boot protocol.

This file contains prose, and machine-readable fragments. The data in a machine-readable fragment relate to the section and subsection in which it is found.


The file is in markdown format. The machine-readable fragments are markdown literals containing RFC-822-like (deb822-like) data.

In each case, descriptions which expand on the name of a feature as provided in the section heading, precede the Status indications. Any paragraphs which follow the Status indication are caveats or qualifications of the information provided in Status fields.

3.1 Keys found in the Feature Support subsections

3.1.1 Status

This gives the overall status of the feature, including security support status, functional completeness, etc. Refer to the detailed definitions below.

If support differs based on implementation (for instance, x86 / ARM, Linux / QEMU / FreeBSD), one line for each set of implementations will be listed.
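To make the fragment format concrete, here is a minimal sketch of reading such key/value data. The sample fragment, its `Status, x86` / `Status, ARM` key spelling, and the function name `parse_fragment` are illustrative assumptions, not taken from this file; the sketch also ignores any continuation-line rules a full deb822 parser would handle.

```python
def parse_fragment(text):
    """Parse 'Key: value' lines into a dict.

    Qualified keys such as 'Status, x86' are kept verbatim as dictionary keys.
    Lines without a colon, and blank lines, are skipped.
    """
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or ":" not in line:
            continue
        # Split on the first colon only, so values may contain colons (e.g. URLs).
        key, value = line.split(":", 1)
        fields[key.strip()] = value.strip()
    return fields


# Hypothetical fragment with one Status line per implementation.
example = """
    Status, x86: Supported
    Status, ARM: Tech Preview
"""
```

Calling `parse_fragment(example)` yields one entry per implementation, which a script could then compare against the status labels defined below.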

3.2 Definition of Status labels

Each Status value corresponds to levels of security support, testing, stability, etc., as follows:

3.2.1 Experimental

3.2.2 Tech Preview

3.2.2.1 Supported

3.2.2.2 Deprecated

All of these may appear in modified form. There are several interfaces, for instance, which are officially declared as not stable; in such a case this feature may be described as “Stable / Interface not stable”.

3.3 Definition of the status label interpretation tags

3.3.1 Functionally complete

Does it behave like a fully functional feature? Does it work on all expected platforms, or does it only work for a very specific sub-case? Does it have a sensible UI, or do you have to have a deep understanding of the internals to get it to work properly?

3.3.2 Functional stability

What is the risk of it exhibiting bugs?

General answers to the above:

Here be dragons

Pretty likely to still crash / fail to work. Not recommended unless you like life on the bleeding edge.

Quirky

Mostly works but may have odd behavior here and there. Recommended for playing around or for non-production use cases.

Normal

Ready for production use

3.3.3 Interface stability

If I build a system based on the current interfaces, will they still work when I upgrade to the next version?

Not stable

Interface is still in the early stages and still fairly likely to be broken in future updates.

Provisionally stable

We’re not yet promising backwards compatibility, but we think this is probably the final form of the interface. It may still require some tweaks.

Stable

We will try very hard to avoid breaking backwards compatibility, and to fix any regressions that are reported.

3.3.4 Security supported

Will XSAs be issued if security-related bugs are discovered in the functionality?

If “no”, anyone who finds a security-related bug in the feature will be advised to post it publicly to the Xen Project mailing lists (or contact another security response team, if a relevant one exists).

Bugs found after the end of Security-Support-Until in the Release Support section will receive an XSA if they also affect newer, security-supported, versions of Xen. However, the Xen Project will not provide official fixes for non-security-supported versions.

Three common ‘diversions’ from the ‘Supported’ category are given the following labels:

Supported, Not security supported

Functionally complete, normal stability, interface stable, but no security support

Supported, Security support external

This feature is security supported by a different organization (not the XenProject). See External security support below.

Supported, with caveats

This feature is security supported only under certain conditions, or support is given only for certain aspects of the feature, or the feature should be used with care because it is easy to use insecurely without knowing it. Additional details will be given in the description.

3.3.5 Interaction with other features

Not all features interact well with all other features. Some features are only for HVM guests; some don’t work with migration, &c.

3.3.6 External security support

The XenProject security team provides security support for XenProject projects.

We also provide security support for Xen-related code in Linux, which is an external project but doesn’t have its own security process.

External projects that provide their own security support for Xen-related features are listed below.

QEMU https://wiki.qemu.org/index.php/SecurityProcess

Libvirt https://libvirt.org/securityprocess.html

FreeBSD https://www.freebsd.org/security/

NetBSD http://www.netbsd.org/support/security/

OpenBSD https://www.openbsd.org/security.html

Xen Project PVHVM drivers for Linux HVM guests

This page lists some resources about using optimized paravirtualized PVHVM drivers (also called PV-on-HVM drivers) with Xen Project fully virtualized HVM guests running (unmodified) Linux kernels. Xen Project PVHVM drivers completely bypass the Qemu emulation and provide much faster disk and network IO performance.

Note that Xen Project PV (paravirtual) guests automatically use PV drivers, so there's no need for these drivers if you use PV domUs! (you're already automatically using the optimized drivers). These PVHVM drivers are only required for Xen Project HVM (fully virtualized) guest VMs.

Xen Project PVHVM Linux driver sources:

- Upstream vanilla kernel.org Linux 2.6.36 kernel and later versions contain Xen Project PVHVM drivers out-of-the-box!

- Jeremy's pvops kernel in xen.git (branch: xen/stable-2.6.32.x) contains the new PVHVM drivers. These drivers are included in upstream Linux kernel 2.6.36+. See XenParavirtOps wiki page for more information about pvops kernels.

- Easy-to-use 'old unmodified_drivers' PV-on-HVM drivers patch for Linux 2.6.32 (Ubuntu 10.04 and other distros): http://lists.xensource.com/archives/html/xen-devel/2010-05/msg00392.html

- The Xen Project source tree contains an 'unmodified_drivers' directory, which has the 'old' PV-on-HVM drivers. These drivers build easily with Linux 2.6.18 and 2.6.27, but require some hackery to get them to build with 2.6.3x kernels (see below for help).

Xen Project PVHVM drivers in upstream Linux kernel

Xen Project developers rewrote the PVHVM drivers in 2010 and submitted them for inclusion in upstream Linux kernel. Xen Project PVHVM drivers were merged to upstream kernel.org Linux 2.6.36, and various optimizations were added in Linux 2.6.37. Today upstream Linux kernels automatically and out-of-the-box include these PVHVM drivers.

List of email threads and links to git branches related to the new Xen PV-on-HVM drivers for Linux:

These new Xen Project PVHVM drivers are also included in Jeremy's pvops kernel xen.git, in branch 'xen/stable-2.6.32.x' . See XenParavirtOps wiki page for more information.

There's also a backport of the Linux 2.6.36+ Xen Project PVHVM drivers to Linux 2.6.32 kernel, see these links for more information:

- and the git branch: http://xenbits.xen.org/gitweb?p=people/sstabellini/linux-pvhvm.git;a=shortlog;h=refs/heads/2.6.32-pvhvm .

Xen Project PVHVM drivers configuration example

In dom0 in the '/etc/xen/<vm>' configuration file use the following syntax:
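A minimal sketch of such a fragment follows. Only `xen_platform_pci` and the e1000 NIC model come from the description on this page; the disk image path, MAC address, and bridge name are placeholder assumptions. The lines are plain `key = value` assignments, written so that they also parse as Python.

```python
# 1 exposes the Xen platform PCI device so the guest can use PVHVM drivers;
# 0 leaves the guest on the Qemu emulated devices.
xen_platform_pci = 1

# Disk presented as emulated IDE device 'hda' (placeholder image path).
disk = ['file:/path/to/disk.img,hda,w']

# Emulated Intel e1000 NIC (placeholder MAC address and bridge name).
vif = ['mac=00:16:3e:00:00:01, bridge=xenbr0, model=e1000']
```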

With this example configuration, when 'xen_platform_pci' is enabled ('1'), the guest VM can use optimized PVHVM drivers: xen-blkfront for disk, and xen-netfront for network. When 'xen_platform_pci' is disabled ('0'), the guest VM will use Xen Qemu-dm emulated devices: an emulated IDE disk and an emulated Intel e1000 NIC.

If you need a full configuration file example for PVHVM, see below.

Enable or disable Xen Project PVHVM drivers from dom0


In the configuration file for the Xen Project HVM VM ('/etc/xen/<vm>') in dom0 you can control the availability of Xen Platform PCI device. Xen Project PVHVM drivers require that virtual PCI device to initialize and operate.

To enable Xen Project PVHVM drivers for the guest VM, set 'xen_platform_pci=1'.

To disable Xen Project PVHVM drivers for the guest VM, set 'xen_platform_pci=0'.

The 'xen_platform_pci' setting is available in Xen 4.x versions. It is NOT available in the stock RHEL5/CentOS5 Xen or in Xen 3.x.

Linux kernel commandline boot options for controlling Xen Project PVHVM drivers unplug behaviour

When using the optimized Xen Project PVHVM drivers with a fully virtualized Linux VM, there are some kernel options you can use to control the 'unplug' behaviour of the Qemu emulated IDE disk and network devices. When using the optimized devices, the Qemu emulated devices need to be 'unplugged' at the beginning of the Linux VM boot process, so that there is no risk of data corruption from the Qemu emulated device and the PVHVM device being active at the same time (both are provided by the same backend).

TODO: List the kernel cmdline options here.
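One option that can be named here is `xen_emul_unplug=`, which the upstream Linux kernel parameter documentation describes as taking a comma-separated list of keywords. The sketch below extracts and validates that option from a kernel command line string; the helper name `unplug_setting` is hypothetical, and the keyword descriptions are paraphrased from the kernel documentation rather than from this page.

```python
# Keywords accepted by xen_emul_unplug=, paraphrased from the Linux kernel
# parameter documentation.
UNPLUG_KEYWORDS = {
    "ide-disks": "unplug primary master IDE devices",
    "aux-ide-disks": "unplug non-primary-master IDE devices",
    "nics": "unplug network devices",
    "all": "unplug all emulated devices (NICs and IDE disks)",
    "unnecessary": "unplugging is unnecessary even if the host did not "
                   "respond to the unplug protocol",
    "never": "do not unplug even if the version check succeeds",
}


def unplug_setting(cmdline):
    """Return the xen_emul_unplug keywords found in a kernel command line.

    Returns None when the option is absent and raises ValueError when an
    unknown keyword is given.
    """
    for word in cmdline.split():
        if word.startswith("xen_emul_unplug="):
            values = word.split("=", 1)[1].split(",")
            unknown = [v for v in values if v not in UNPLUG_KEYWORDS]
            if unknown:
                raise ValueError("unknown xen_emul_unplug keyword(s): %s" % unknown)
            return values
    return None
```

Inside a guest, the string to check can be read from '/proc/cmdline'.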

Tips about how to build the old 'unmodified_drivers' with different Linux versions

- With Linux 2.6.32: http://lists.xensource.com/archives/html/xen-devel/2010-04/msg00502.html

- With Linux 2.6.27: http://wp.colliertech.org/cj/?p=653

- With arbitrary kernel version (custom patch): http://blog.alex.org.uk/2010/05/09/linux-pv-drivers-for-xen-hvm-building-normally-within-an-arbitrary-kernel-tree/

Some Linux distributions also ship Xen Project PVHVM drivers as binary packages

- RHEL5 / CentOS 5

- CentOS 6

- SLES 10

- SLES 11

- OpenSUSE

- Linux distros that ship with Linux 2.6.36 or later kernel include Xen Project PVHVM drivers in the default kernel.

You might also want to check out the XenKernelFeatures wiki page.

Verifying Xen Project Linux PVHVM drivers are using optimizations

If you're using at least the Xen Project 4.0.1 hypervisor and the new upstream Linux PVHVM drivers available in Linux 2.6.36 and later versions, follow these steps:


- Make sure you're using the PVHVM drivers.

- Add 'loglevel=9' parameter for the HVM guest Linux kernel cmdline in grub.conf.

- Reboot the guest VM.

- Check 'dmesg' for the following text: 'Xen HVM callback vector for event delivery is enabled'.
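The last step can be scripted. The following is a minimal sketch that searches kernel log text for the message quoted above; the function name `callback_vector_enabled` is hypothetical, and the caller is assumed to supply the log text (for example, the captured output of 'dmesg').

```python
CALLBACK_MSG = "Xen HVM callback vector for event delivery is enabled"


def callback_vector_enabled(dmesg_text):
    """Return True if any line of the kernel log contains the callback message."""
    return any(CALLBACK_MSG in line for line in dmesg_text.splitlines())
```

One way to obtain the text in a guest is `subprocess.run(["dmesg"], capture_output=True, text=True).stdout`, which may need root privileges on some systems.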

Some distro kernels, or a custom kernel from the xen/stable-2.6.32.x git branch, might have these optimizations available as well.

Example HVM guest configuration file for PVHVM use

Example configuration file ('/etc/xen/f16hvm') for Xen Project 4.x HVM guest VM using Linux PVHVM paravirtualized optimized drivers for disks and network:

This example has been tested and is working on Fedora 16 Xen Project dom0 host using the included Xen Project 4.1.2 and Linux 3.1 kernel in dom0, and Fedora 16 Xen Project PVHVM guest VM, also using the stock F16 Linux 3.1 kernel with the out-of-the-box included PVHVM drivers.

Verify Xen Project PVHVM drivers are working in the Linux HVM guest kernel

Run "dmesg | egrep -i 'xen|front'" in the HVM guest VM. This example is from a Fedora 16 PVHVM guest running the Linux 3.1 kernel. You should see messages like this:

The following lines in particular relate to the Xen Project PVHVM drivers:

Here you can see the Qemu-dm emulated devices are being unplugged for safety reasons. Also 'xen-blkfront' paravirtualized driver is being used for the block device 'xvda', and the xen-netfront paravirtualized network driver is being initialized.

Verify network interface 'eth0' is using the optimized paravirtualized xen-netfront driver:

A driver value of 'vif' means it's a Xen Virtual Interface paravirtualized driver.
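A minimal sketch of this check, assuming the driver name is obtained with 'ethtool -i eth0' (the exact command is an assumption; it is not shown on this page). The helper name `nic_driver` and the sample output are illustrative.

```python
def nic_driver(ethtool_output):
    """Return the value of the 'driver:' line in `ethtool -i` output, or None."""
    for line in ethtool_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "driver":
            return value.strip()
    return None


# Illustrative output for a paravirtualized interface.
sample = "driver: vif\nversion: \nfirmware-version: \nbus-info: vif-0\n"
```

Here `nic_driver(sample)` returns the driver name, which can then be compared against 'vif'.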

Also you can verify from '/proc/partitions' file that your disk devices are called xvd* (Xen Virtual Disk) so you're using the optimized paravirtualized disk/block driver:
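The same check can be sketched in code. The function below lists the xvd* entries in text laid out like '/proc/partitions' (a header line, a blank line, then four whitespace-separated columns); the function name `pv_disks` and the sample contents are illustrative assumptions.

```python
def pv_disks(partitions_text):
    """Return the names of xvd* devices listed in /proc/partitions-style text."""
    names = []
    for line in partitions_text.splitlines():
        fields = line.split()
        # Data rows have four columns starting with a numeric major number;
        # this skips the header line and blank lines.
        if len(fields) == 4 and fields[0].isdigit():
            if fields[3].startswith("xvd"):
                names.append(fields[3])
    return names


# Illustrative contents: one PV disk with a partition, plus an LVM volume.
sample = """major minor  #blocks  name

 202        0   20971520 xvda
 202        1     512000 xvda1
 253        0    1048576 dm-0
"""
```

An empty result suggests the guest is still using emulated disks.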

Using Xen Project PVHVM drivers with Ubuntu HVM guests

Ubuntu 11.10, at least, does NOT build the Xen platform PCI driver into the kernel, so if you want to use Xen Project PVHVM drivers you need to add the 'xen-platform-pci' driver, in addition to 'xen-blkfront', to the initramfs image. Note that the Xen platform PCI driver is required for Xen Project PVHVM to work.

Using Xen PVHVM drivers with Ubuntu 11.10:

- Add 'xen-platform-pci' to file '/etc/initramfs-tools/modules'.

- Re-generate the kernel initramfs image with: 'update-initramfs -u'.

- Make sure '/etc/fstab' is mounting partitions based on Label or UUID. Names of the disk devices will change when using PVHVM drivers (from sd* to xvd*). This doesn't affect LVM volumes.

- Set 'xen_platform_pci=1' in '/etc/xen/<ubuntu>' configfile in dom0.

- Start the Ubuntu VM and verify it's using PVHVM drivers (see above).
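Two of the steps above lend themselves to a small script. The sketch below works on file contents passed in as strings, so nothing is written to disk; the helper names `add_module` and `device_name_mounts` are hypothetical, and the caller is assumed to read and write '/etc/initramfs-tools/modules' and '/etc/fstab' separately.

```python
import re


def add_module(modules_text, module="xen-platform-pci"):
    """Return modules-file text with `module` appended unless already listed."""
    listed = [line.split()[0] for line in modules_text.splitlines()
              if line.strip() and not line.lstrip().startswith("#")]
    if module in listed:
        return modules_text
    if modules_text and not modules_text.endswith("\n"):
        modules_text += "\n"
    return modules_text + module + "\n"


def device_name_mounts(fstab_text):
    """Return fstab source fields that refer to sd* devices by name."""
    hits = []
    for line in fstab_text.splitlines():
        fields = line.split()
        if fields and re.match(r"/dev/sd[a-z]", fields[0]):
            hits.append(fields[0])
    return hits
```

Any entry reported by `device_name_mounts` is a candidate for switching to a Label or UUID before enabling the PVHVM drivers; after changing the modules file, 'update-initramfs -u' still has to be run.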


Performance Tradeoffs


- For workloads that favor PV MMUs, PVonHVM can have a small performance hit compared to PV.

- For workloads that favor nested paging (in hardware e.g. Intel EPT or AMD NPT), PVonHVM performs much better than PV.

- Best to take a close look and measure your particular workload(s).

- Follow trends in hardware-assisted virtualization.

- 64bit vs. 32bit can also be a factor (e.g. in Stefano's benchmarks, linked to below, 64bit tends to be faster), but it's always best to actually do the measurements.

- More benchmarks very welcome!


Take a look at Stefano's slides and Xen Summit talk: